This is the final part of our Straight Through Processing (STP) series. The preceding articles provide a solid basis for understanding what STP is and the key factors involved in assessing STP opportunities within your own document-oriented processes.

Practical Steps for a PoC to Assess STP

These practical steps for conducting a Proof of Concept (PoC) offer guidance on assessing the STP potential of any intelligent capture system. The main value of intelligent capture software is its ability to extract as much information as possible from unstructured documents at the highest levels of accuracy. So while user experience and operational management capabilities are also important, if the software fails to deliver highly accurate data, you might as well stay with a manual process. To measure a system accurately, we need good test data against which to observe its performance. This is called “ground truth data.”

Step One: Test Data

Ground truth data is essentially sample data that comes with an answer key. For instance, if you plan to test and compare systems’ ability to process invoice data, the ground truth data will consist of sample invoices along with the actual value of each field you wish to extract from each one. Each sample would look something like this:

File Name: Invoice1234.PDF

Invoice Date: 9/13/2019

Invoice Number: 123456

Invoice Amount: 2112.00
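In practice, it helps to keep the answer key in a machine-readable form so every system’s output can be scored the same way. The sketch below assumes a hypothetical CSV layout with one row per sample file and one column per field (reusing the sample above); the function name `load_ground_truth` is illustrative. Values are kept as strings so that formatting such as “2112.00” is preserved exactly.

```python
import csv
import io
from typing import Dict

# Hypothetical CSV layout: one row per sample file, one column per field to extract.
GROUND_TRUTH_CSV = """File Name,Invoice Date,Invoice Number,Invoice Amount
Invoice1234.PDF,9/13/2019,123456,2112.00
"""


def load_ground_truth(text: str) -> Dict[str, Dict[str, str]]:
    """Map each file name to its expected field values (kept as strings)."""
    reader = csv.DictReader(io.StringIO(text))
    answer_key: Dict[str, Dict[str, str]] = {}
    for row in reader:
        # Use the file name as the key; the remaining columns are the expected fields.
        file_name = row.pop("File Name")
        answer_key[file_name] = dict(row)
    return answer_key


answer_key = load_ground_truth(GROUND_TRUTH_CSV)
print(answer_key["Invoice1234.PDF"]["Invoice Amount"])
```

Asking vendors to return their results in the same layout makes the later comparison a simple lookup by file name and field.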

Step Two: Gather Test Data

Your test data should be taken from real production examples. Artificial test data can sometimes substitute for real data, but it typically fails to represent the true nature of your documents.

How much test data you need to reliably measure a system depends on how much variation exists within each document type. The more test data you have, the more accurate your measurements will be. Treat 500 samples as a bare minimum for reliably judging whether a given system will perform in production the way it performs in testing.
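One way to see why sample size matters is to estimate how much a measured accuracy could move by chance. The sketch below uses the standard normal approximation to the binomial for a 95% margin of error; it assumes samples are independent, which fields from the same document often are not, so counting documents rather than fields for `n` gives a more conservative estimate. The function name is hypothetical.

```python
import math


def margin_of_error(accuracy: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for an accuracy measured on n samples.

    Assumes the normal approximation to the binomial and independent samples.
    """
    return z * math.sqrt(accuracy * (1 - accuracy) / n)


# How the uncertainty around a measured 90% accuracy shrinks as the test set grows.
for n in (100, 500, 2000):
    print(n, round(margin_of_error(0.90, n), 3))
```

For a measured accuracy of 90%, the margin shrinks from roughly ±5.9 percentage points at 100 samples to roughly ±2.6 points at 500, which is one reason small test sets can make systems look more (or less) capable than they really are.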

Step Three: Configuring the System

Generally, it is impractical to configure systems on your own. While all intelligent capture software is designed to produce structured data from unstructured documents, the way each system is configured varies widely, and learning several systems well enough to build a highly tuned configuration for each is not realistic. This is where the vendors come in. They understand their software better than anyone and can provide the best support in configuring a system for a test run, so whenever possible, have the vendor supply the configuration.

One word of caution, however: it is possible to create a configuration that works well in a test but is not practical to use in production. To put it bluntly, a PoC can be gamed. For instance, if you need to classify a wide variety of documents, such as mortgage loan files, a system can be configured with rules or templates built from the sample data that will not work well in a real-world production environment, where the variety of documents goes well beyond what is used in a PoC.

There are two ways to deal with this. The first is to always ask how the software was configured, so you can tell whether the configuration only works for the PoC or whether the same configuration methodology would also work in production. The second is to create two sample sets that are similar in characteristics (e.g., number of samples, amount of variance, document types) but contain completely different files. Provide one set, called the “training set,” to the vendor, and use the other set, the “test set,” to actually test the system.
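A minimal sketch of building the two sets, assuming each sample file has already been labeled with its document type. Splitting within each document type keeps the mix of types similar in both sets, while guaranteeing that no file appears in both. The function name, the labels, and the fixed seed are illustrative assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def split_samples(samples: Dict[str, str], seed: int = 42) -> Tuple[List[str], List[str]]:
    """Split file names into disjoint training and test sets.

    samples maps file name -> document type (hypothetical labels).
    Each document type is split roughly in half, so both sets share a similar mix.
    """
    by_type: Dict[str, List[str]] = defaultdict(list)
    for file_name, doc_type in samples.items():
        by_type[doc_type].append(file_name)

    rng = random.Random(seed)  # fixed seed so the split can be reproduced
    training: List[str] = []
    test: List[str] = []
    for doc_type in sorted(by_type):
        files = sorted(by_type[doc_type])
        rng.shuffle(files)
        half = len(files) // 2  # with an odd count, the extra file goes to the test set
        training.extend(files[:half])
        test.extend(files[half:])
    return training, test


samples = {f"inv{i}.pdf": "invoice" for i in range(10)}
samples.update({f"rct{i}.pdf": "receipt" for i in range(6)})
training_set, test_set = split_samples(samples)
print(len(training_set), len(test_set))
```

Only the training set goes to the vendor; the test set stays with you until the test run.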

Allow a reasonable amount of time between handing over the training set and conducting the test run: a couple of weeks at most, depending on the complexity of the PoC. Simple data extraction PoCs should require a week or less of configuration and preparation by the vendor.

Step Four: Conducting the Test

The big day has arrived, or has it? Conducting the test is not really the main event; examining the results is the main priority. The test itself should simply be the day you provide the test set to the vendor, who processes it and returns the results. Be clear with the vendor about how much time is allowed to produce results. What you do not want is for the vendor to reconfigure the system to improve its results on the test set. One way to reduce the likelihood of gamesmanship is to have the configured software delivered and installed in an environment you control. From there, the vendor can be authorized only to run the test data through the system, leaving no room for chicanery.

Step Five: Analyzing the Results

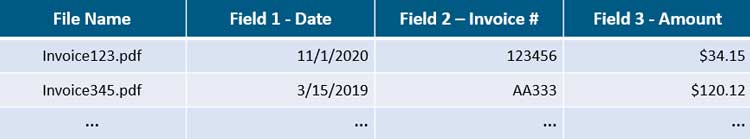

Analysis of the results is the main focus, since the true value of an intelligent capture system lies in delivering the most data with the highest levels of accuracy. Because you have ground truth data for your test set, you have an easy way to compare each system’s output on an apples-to-apples basis. Ask for the results to be delivered in a simple structured format, such as a table with one row per file and one column per field (for the invoice example: File Name, Invoice Date, Invoice Number and Invoice Amount).

Each cell of the table contains the value the system extracted for that file and field. From here you can directly compare each system’s structured results with your answer key to determine the total accuracy of the system. The total system accuracy is the number of accurate output fields divided by the total number of fields:

$$
\text{System Accuracy} = \frac{\text{Number of accurate output fields}}{\text{Total number of fields}}
$$

For instance, if your sample set of 500 invoices has 1,500 total fields (three per invoice: date, invoice number and amount) and System A outputs 800 accurate fields, then System A’s accuracy is 800/1500, or about 53%.
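The calculation can be sketched as follows, assuming both the answer key and the vendor output are dictionaries keyed by file name, each holding field name to value. A file or field missing from the output is counted as inaccurate. Treating two values as equal after trimming whitespace and ignoring case is an assumption; real evaluations usually need explicit rules for dates and amounts. The function names are hypothetical.

```python
from typing import Dict

Record = Dict[str, str]


def normalize(value: str) -> str:
    # Assumption: only surrounding whitespace and letter case are ignored.
    return value.strip().lower()


def system_accuracy(ground_truth: Dict[str, Record], output: Dict[str, Record]) -> float:
    """Accurate output fields divided by the total number of ground-truth fields."""
    total = 0
    accurate = 0
    for file_name, expected in ground_truth.items():
        actual = output.get(file_name, {})  # a missing file counts as all fields inaccurate
        for field, true_value in expected.items():
            total += 1
            produced = actual.get(field)
            if produced is not None and normalize(produced) == normalize(true_value):
                accurate += 1
    return accurate / total if total else 0.0


ground_truth = {"Invoice1234.PDF": {"Invoice Date": "9/13/2019", "Invoice Number": "123456", "Invoice Amount": "2112.00"}}
vendor_output = {"Invoice1234.PDF": {"Invoice Date": "9/13/2019", "Invoice Number": "123456", "Invoice Amount": "2112.0"}}
print(system_accuracy(ground_truth, vendor_output))
```

Here “2112.0” does not match “2112.00”, so only two of three fields count as accurate; agreeing on normalization rules before scoring keeps the comparison fair across vendors. Fed the 500-invoice example above with 800 matching fields, the function returns 800/1500.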

Once you have compared each system’s output to the answer key, you have a solid understanding of each system’s accuracy. If some data fields are more important than others, you can use the same comparison method to measure accuracy at the field level; for instance, the accuracy of Invoice Amount is the number of accurate Invoice Amount outputs divided by the total number of Invoice Amount fields.
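Field-level accuracy is the same ratio computed separately for each field name. The sketch below assumes the same hypothetical dictionary layout as the answer key (file name to field values) and, as an assumption, compares values after trimming surrounding whitespace.

```python
from collections import Counter
from typing import Dict

Record = Dict[str, str]


def field_accuracy(ground_truth: Dict[str, Record], output: Dict[str, Record]) -> Dict[str, float]:
    """Per-field accuracy: accurate outputs for a field / total occurrences of that field."""
    totals: Counter = Counter()
    hits: Counter = Counter()
    for file_name, expected in ground_truth.items():
        actual = output.get(file_name, {})  # missing files count as inaccurate
        for field, true_value in expected.items():
            totals[field] += 1
            produced = actual.get(field)
            if produced is not None and produced.strip() == true_value.strip():
                hits[field] += 1
    return {field: hits[field] / totals[field] for field in totals}


ground_truth = {
    "a.pdf": {"Invoice Date": "9/13/2019", "Invoice Number": "123456", "Invoice Amount": "2112.00"},
    "b.pdf": {"Invoice Date": "9/14/2019", "Invoice Number": "123457", "Invoice Amount": "99.50"},
}
vendor_output = {
    "a.pdf": {"Invoice Date": "9/13/2019", "Invoice Number": "123456", "Invoice Amount": "2112.00"},
    "b.pdf": {"Invoice Date": "9/14/2019", "Invoice Number": "123475"},  # wrong number, no amount
}
print(field_accuracy(ground_truth, vendor_output))
```

In this hypothetical output, Invoice Date is fully accurate while Invoice Number and Invoice Amount are each accurate on only half of the files, a difference that an overall accuracy figure would hide.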

Is that All?

If your goal is to reduce the amount of data entry (but not the amount of data verification), the analysis described in Step Five will show how much data entry can be avoided. However, if your goal is to avoid having staff review output at all, you need another piece of data: the confidence score. In Part 6, we discussed how confidence scores can be used to determine how much data can be processed with no need for review. We do that by sorting the output at the data field level by confidence score to identify a confidence score threshold. This threshold determines whether data should be reviewed by staff or proceed straight through with no review.
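A minimal sketch of one way to pick that threshold for a single field: sort the (confidence score, is-correct) pairs from highest to lowest confidence, then find the lowest confidence at which everything at or above it still meets a target accuracy. The `target_accuracy` parameter and function name are assumptions for illustration, and the exact procedure described in Part 6 may differ.

```python
from typing import List, Optional, Tuple


def find_threshold(results: List[Tuple[float, bool]], target_accuracy: float = 0.99) -> Tuple[Optional[float], float]:
    """Return (threshold, stp_rate) for one field.

    results: (confidence score, is_correct) pairs for that field across the test set.
    threshold: lowest confidence t such that outputs with confidence >= t are at least
    target_accuracy correct, or None if no such t exists.
    stp_rate: share of outputs at or above the threshold (they would skip review).
    """
    ordered = sorted(results, key=lambda r: r[0], reverse=True)
    best_threshold: Optional[float] = None
    best_count = 0
    correct = 0
    for i, (confidence, is_correct) in enumerate(ordered):
        correct += is_correct
        count = i + 1
        # Only evaluate a cutoff once every output sharing this confidence is included.
        if count < len(ordered) and ordered[count][0] == confidence:
            continue
        if correct / count >= target_accuracy:
            best_threshold = confidence
            best_count = count
    stp_rate = best_count / len(ordered) if ordered else 0.0
    return best_threshold, stp_rate


invoice_amounts = [(0.99, True), (0.95, True), (0.90, True), (0.80, False), (0.70, True)]
print(find_threshold(invoice_amounts, target_accuracy=1.0))
```

With a target of 100% accuracy, this hypothetical data yields a threshold of 0.90, so three of five outputs (60%) would go straight through; relaxing the target admits more data at the cost of letting some errors pass unreviewed.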

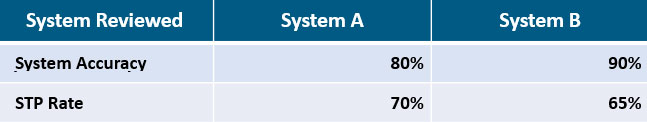

The process is just as described in Part 6, except that you perform this analysis on each system’s output. You may find that one system has lower overall accuracy but can deliver more straight through processing. For instance, after reviewing output by system accuracy and confidence score, we might find that System A has lower overall accuracy than System B yet achieves a higher STP rate.

So how can System A deliver higher STP with lower system accuracy? It all comes down to the reliability of confidence scores. System A may be able to associate high confidence scores with accurate data more reliably than System B. So even though System B’s overall output is more accurate, it cannot tell with much precision which of that data is accurate. This forces staff to verify a larger share of data that was already accurate.
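To see this effect, you can split each system’s output around its threshold and count what goes straight through and what lands in the review queue. The sketch below uses small, entirely hypothetical (confidence score, is-correct) lists for two systems; the function name and the thresholds chosen are illustrative assumptions, not measured results.

```python
from typing import Dict, List, Tuple


def review_breakdown(results: List[Tuple[float, bool]], threshold: float) -> Dict[str, int]:
    """Count outputs that skip review (stp) or need review, split by correctness."""
    counts = {"stp_correct": 0, "stp_errors": 0, "review_correct": 0, "review_errors": 0}
    for confidence, is_correct in results:
        route = "stp" if confidence >= threshold else "review"
        outcome = "correct" if is_correct else "errors"
        counts[f"{route}_{outcome}"] += 1
    return counts


# Hypothetical data: System A is less accurate overall (4 of 6) but its scores separate
# right from wrong; System B is more accurate (5 of 6) but gives an error a 0.92 score.
system_a = [(0.98, True), (0.95, True), (0.93, True), (0.40, False), (0.35, False), (0.30, True)]
system_b = [(0.97, True), (0.92, False), (0.85, True), (0.80, True), (0.75, True), (0.70, True)]

# Thresholds chosen so that no errors pass straight through for either system.
print("A", review_breakdown(system_a, threshold=0.90))
print("B", review_breakdown(system_b, threshold=0.95))
```

Here System A sends three of six outputs straight through, while System B sends only one and forces staff to review four outputs that were already correct.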

Summary: Data Entry Savings vs. Savings from STP

Whether to focus on data entry savings or savings from STP ultimately depends on business needs, including the nature of a given process. Every PoC or evaluation of intelligent capture should always measure system accuracy objectively. Without a solid understanding of that single data point, you will have no understanding of system capability and, ultimately, of the likelihood of success for your projects.

Past articles on STP have covered: (1) the concept of attended and unattended automation; (2) why STP is important; (3) how to achieve high STP in document automation; (4) examples of what it looks like; (5) how to accurately assess STP; (6) how STP works and the technology behind it; and (7) the reliability of OCR and capture tools.

If you found this article interesting, you might find this eBook and webinar useful; they focus on how to develop a successful proof of concept project to evaluate and select the right technology for your document automation.