The image-to-data problem comes with a lot of abbreviations and market-speak for the technologies commercially available to solve it: OCR, ICR, ACR, NHR, and so on. Specific applications of these technologies, with abbreviations like CAR/LAR and MICR, complicate things further.

So what are the major differences between OCR and ICR, and why is one set of technologies not best suited to every problem?

When we talk about OCR, we are really talking about converting data from an image of machine-created text, whether that is a document produced by an office application or an old typewritten page. In these cases the print is typically high quality and very consistent. OCR technologies were therefore built to compare the letter on the image to the letters in a database and report a match when the two agree. In this situation, the letter “A” always looks like the letter “A”, and most of the time the only variation is the font in which that “A” is printed. That makes most OCR technologies sensitive to fonts. Nearly all OCR packages include a very large database of fonts, and a few have technologies that factor the font out of the equation entirely. This is called “omnifont”.

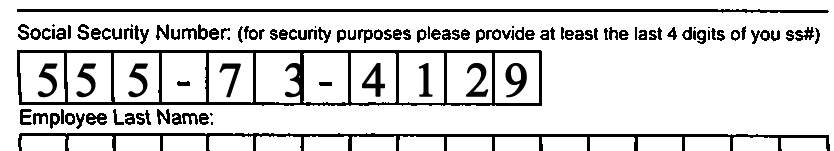

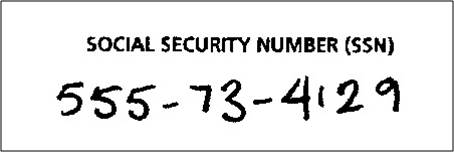

In most cases, OCR does not require the assistance of third-party databases, because the information is high quality and highly standardized. Recognizing the digits in a typed Social Security number is no more difficult than recognizing the digits that describe the length of a 2×4. However, because many OCR technologies rely on matching a character to a pre-built database, “noise” such as a comb or box that could not be removed or ignored can degrade the results. For instance, if a 3 is typed inside a box and touches or crosses the edge of that box, the technology, especially without context, would have difficulty arriving at the correct value.
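The sample-comparison idea, and the effect of a stray box line on it, can be sketched in a few lines of Python. This is only an illustration, not how any particular OCR product works: the 5×5 bitmaps, the `TEMPLATES` table, and the `similarity` and `recognize` helpers are all hypothetical, and a real engine would store many references per font and use far more robust scoring.

```python
# Hypothetical 5x5 reference glyphs ("#" = ink, "." = background).
TEMPLATES = {
    "A": ["..#..",
          ".#.#.",
          "#####",
          "#...#",
          "#...#"],
    "H": ["#...#",
          "#...#",
          "#####",
          "#...#",
          "#...#"],
    "L": ["#....",
          "#....",
          "#....",
          "#....",
          "#####"],
}


def similarity(glyph, template):
    """Fraction of pixels that agree between two equally sized bitmaps."""
    pairs = [(g, t)
             for g_row, t_row in zip(glyph, template)
             for g, t in zip(g_row, t_row)]
    return sum(g == t for g, t in pairs) / len(pairs)


def recognize(glyph):
    """Return the best-matching character and its similarity score."""
    scores = {ch: similarity(glyph, tpl) for ch, tpl in TEMPLATES.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


clean_a = TEMPLATES["A"]
# The same "A" with a box edge running through its bottom row (noise).
boxed_a = clean_a[:4] + ["#####"]

print(recognize(clean_a))  # ('A', 1.0)
print(recognize(boxed_a))  # ('A', 0.88)
```

In this toy case the boxed “A” is still read correctly, but its score drops from 1.0 to 0.88. With more similar reference shapes or heavier noise, that shrinking margin is where misreads come from.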

OCR:

- Most basic OCR compares image samples

- OCR is sensitive to fonts

- More advanced OCR uses neural nets to handle “harder fonts”

- Does not rely on context although it can help

- Is impacted by image and zone quality

For ICR, pattern matching goes to a different level. You cannot really succeed in deploying a solution that requires ICR without additional information to aid the recognition process. Using that same SSN example, if the SSN is written by hand in an open field, you will most likely need to assist recognition by providing the data type to look for or a pattern to match. In short, ICR needs more data to be successful. Fortunately, this additional context can be easily supplied within the technology itself in the form of vocabularies or business rules, or via customer-supplied data. ICR is also more sensitive to image and zone quality, since there is typically no standard reference for the characters or words as there is in OCR.
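To make the “data type or pattern” idea concrete, here is a minimal sketch that assumes the recognizer returns several candidate readings per written character, each with a confidence. The `candidates` data, the `SSN_MASK` notation, and the `read_field` helper are invented for illustration and do not reflect any specific ICR product.

```python
# Hypothetical recognizer output: candidate readings with confidences
# for each character position of a handwritten SSN.
candidates = [
    [("l", 0.55), ("1", 0.40)],
    [("2", 0.90)],
    [("3", 0.85)],
    [("-", 0.95)],
    [("4", 0.80)],
    [("S", 0.50), ("5", 0.45)],
    [("-", 0.95)],
    [("6", 0.90)],
    [("7", 0.60), ("1", 0.35)],
    [("B", 0.50), ("8", 0.45)],
    [("9", 0.90)],
]

# Assumed mask notation: "d" means any digit, anything else is a literal.
SSN_MASK = "ddd-dd-dddd"


def allowed(ch, mask_ch):
    """Check one candidate character against one mask position."""
    return ch.isdigit() if mask_ch == "d" else ch == mask_ch


def read_field(candidates, mask=None):
    """Pick the most confident candidate per position.

    When a mask is given, candidates that violate it are discarded first.
    Returns None if the field cannot satisfy the mask.
    """
    if mask is not None and len(mask) != len(candidates):
        return None
    chars = []
    for i, options in enumerate(candidates):
        if mask is not None:
            options = [(c, p) for c, p in options if allowed(c, mask[i])]
        if not options:
            return None
        chars.append(max(options, key=lambda cp: cp[1])[0])
    return "".join(chars)


print(read_field(candidates))            # l23-4S-67B9
print(read_field(candidates, SSN_MASK))  # 123-45-6789
```

Without the mask, the most confident shape wins at every position and letters leak into the number. With the mask, the same raw candidates yield a plausible SSN.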

ICR:

- Cannot rely on image samples alone

- Has heavier reliance on context

- Can be severely impacted by image and zone quality
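The reliance on context can also take the form of a vocabulary. The sketch below uses Python's standard `difflib.get_close_matches` to snap a raw reading to the nearest entry in a list of allowed values. The `STATE_VOCABULARY` list, the `snap_to_vocabulary` helper, and the 0.6 cutoff are assumptions chosen for the example, not settings from any real product.

```python
from difflib import get_close_matches

# Hypothetical vocabulary for a "state" field on a form.
STATE_VOCABULARY = ["Alabama", "Alaska", "Arizona", "Arkansas"]


def snap_to_vocabulary(raw, vocabulary, cutoff=0.6):
    """Return the closest vocabulary entry, or None if nothing is close enough."""
    matches = get_close_matches(raw, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else None


# A handwritten "o" misread as "c" is still recoverable from context.
print(snap_to_vocabulary("Arizcna", STATE_VOCABULARY))  # Arizona
# A reading with no plausible match is rejected instead of guessed.
print(snap_to_vocabulary("Qxzzt", STATE_VOCABULARY))    # None
```

Returning `None` rather than forcing a match leaves room for a business rule to route the field to manual review.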
